With the science fiction thriller Ex Machina hitting our screens this month, we ask two of the film’s advisors, Adam Rutherford and Murray Shanahan: When Will Robots Rule The World?

Professor Stephen Hawking recently warned that artificial intelligence “could spell the end of the human race”.

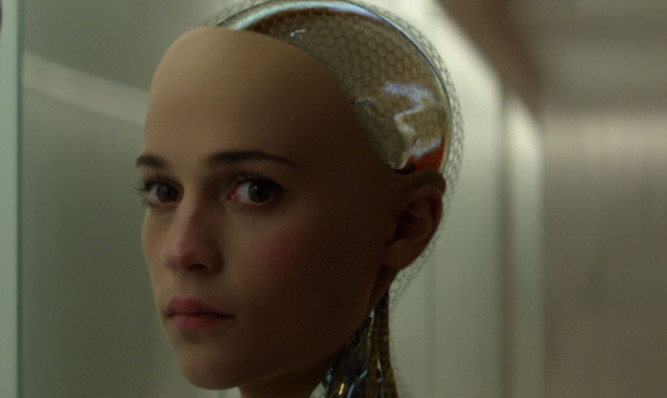

It’s a concerning subject, and one tackled in Ex Machina, a new movie starring Domhnall Gleeson and rising star Alicia Vikander.

In the film, a scientist puts his new invention, an artificially intelligent robot called Ava, to the Turing Test, a trial used in science to see whether a human can tell if they are interacting with a computer.

But trouble comes when Ava tries to rope the human visitor into helping her escape the confines of her laboratory and walk among us.

Scientists Murray Shanahan and Adam Rutherford are both experts in the field and acted as advisors on the film.

They sat down with us to tell us The Honest Truth about artificial intelligence, what it will mean for our short-term future and whether, in the long term, we really will be slaves to our robot masters.

If Stephen Hawking is correct, how long has the human race got left?

Murray Shanahan: I think a good long while. I don’t think there’s any real prospect of us producing anything like Ava in the next 10 years or so.

Within the next 100 years there’s actually quite a good chance we will produce something to the level of human intelligence, but then the question becomes: will it be friendly or hostile?

That is down to AI researchers and the companies involved. But it’s not around the corner.

Why has there suddenly been a lot about artificial intelligence in the news?

MS: There have been statements from people like Elon Musk (who donated £6.5m to AI research) and Stephen Hawking which give the impression that it’s something that’s about to happen, which it’s not.

Then there is the work of Nick Bostrom, who has written at length about what the consequences of human level AI could be, and describes various scenarios where it could go horribly wrong.

But what doesn’t always come out in the media is the timescales involved. Nick Bostrom himself says it will be 100 years before we’re dealing with human level artificial intelligence.

If developing AI could spell our doom, why develop it?

Adam Rutherford: AI means different things. There’s a massive incentive to create autonomous, thinking machines that can live in parallel with us and can contribute and enrich our society, particularly with things like self-driving cars and in terms of processing data.

MS: Right now there is a huge amount of investment in AI start-ups as people see this technology having a huge economic impact in the near future. In the next 10 years I think we are going to see more and more specialist AI.

Why do people have an inherent distrust of scientists?

AR: The stereotype is problematic. Not that long ago, a survey was carried out among children in the UK where they were asked to draw a scientist.

And almost always they came up with a white, middle-aged man, with Einstein crazy hair, a white coat, and maybe a lab rat in his pocket or a row of neatly aligned Biros.

Now those people do exist within science but in molecular biology, the field I have worked in, it’s at least 50% female. Most scientists are regular people.

They are more like Ross from Friends than Doc Brown from Back to the Future. A bit drippy, a bit rubbish with women and hang around cafes. That’s the life of a scientist.

Is there an increasing danger people will get left behind?

MS: I do have concerns about this. I still use an analogue mobile phone and I really like my ancient ‘dumb’ phone but when it comes to replacing it I won’t be able to find one the same.

With the self-driving car, I think there might come a time when it will be impossible to get insurance. The self-driving cars will never hit anything and never cause accidents, so if you say you want to drive your own car you won’t be able to afford to insure it, because you’ll be worse than the machines.

AR: The rate at which technology becomes normalised is breath-taking but I think it’s really important that we recognise that this has been the same throughout history.

Mobile phones didn’t exist to most of us 15 years ago, unless you were a City type carrying a brick, but my daughter, she’s eight, and when I gave her an old fashioned dial telephone, she didn’t know what it was.

We are very good at utilising technology but at the same time not recognising how useful it is.

What other advances in science are causing you concern?

MS: My other main concern is that, if you’re a teenager today, it’s very hard not to buy into an economy where you are forced to give away your privacy.

If you want to use many of the things that we use online you are giving away lots of data about yourself without even thinking about it and you hope that it is going to be used in a good way.

AR: It does concern me that many of the biggest advances in artificial intelligence and robotics are occurring in closed environments, in private corporations such as Google and Microsoft, because then it becomes proprietary and science works best when it is open and data is shared openly.

For the casual observer, the science in Ex Machina seems very plausible. Is this always the case in films?

AR: Fiction has no obligation to the truth nor to reality.

I don’t like seeing stupid science in films, daft things, especially when they are internally inconsistent within the movie. Interstellar was a particular example of appalling internal inconsistencies in the science.

Ex Machina is a film in which the scientific and philosophical ideas that underlie the drama unfolding and the narrative are as robust as I’ve ever seen in a movie. It’s spot on.

And I’m not just saying that because I worked on the film.
